Step 3: Using SAM 2#

Segment Anything 2 (SAM 2) is a segmentation model released in July 2024 that handles both images and videos. From simple prompts, it can generate precise masks and track objects across the frames of a video.

In this notebook, you’ll learn how to:

Understand the key innovations in SAM 2

Apply SAM 2 to image datasets using bounding boxes, keypoints, or no prompts at all

Leverage SAM 2’s video segmentation and mask tracking capabilities with a single-frame prompt

What is SAM 2?#

SAM 2 is the next generation of the Segment Anything Model, originally introduced by Meta in 2023. While SAM was designed for zero-shot segmentation on still images, SAM 2 adds robust video segmentation and tracking capabilities. With just a bounding box or a set of keypoints on a single frame, SAM 2 can segment and track objects across entire video sequences.

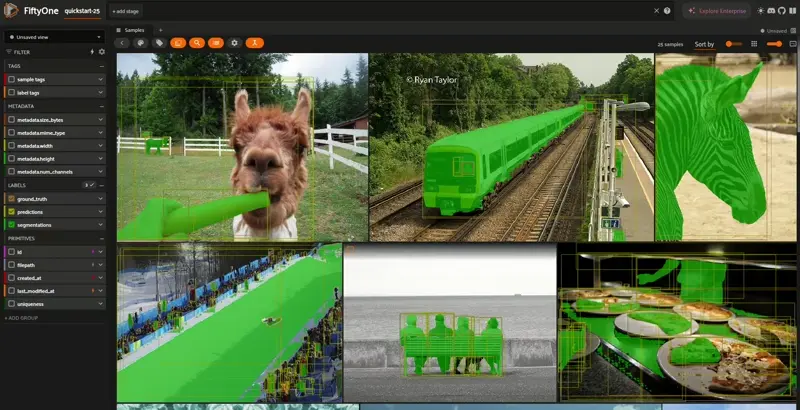

Using SAM 2 for Images#

SAM 2 integrates directly with the FiftyOne Model Zoo, allowing you to apply segmentation to image datasets with minimal code. Whether you’re working with ground truth bounding boxes, keypoints, or want to explore automatic mask generation, FiftyOne makes the process seamless.

[ ]:

import fiftyone as fo

import fiftyone.zoo as foz

# Load dataset

dataset = foz.load_zoo_dataset("quickstart", max_samples=25, shuffle=True, seed=51)

# Load SAM 2 image model

model = foz.load_zoo_model("segment-anything-2-hiera-tiny-image-torch")

# Prompt with bounding boxes

dataset.apply_model(model, label_field="segmentations", prompt_field="ground_truth")

# Launch app to view segmentations

session = fo.launch_app(dataset)

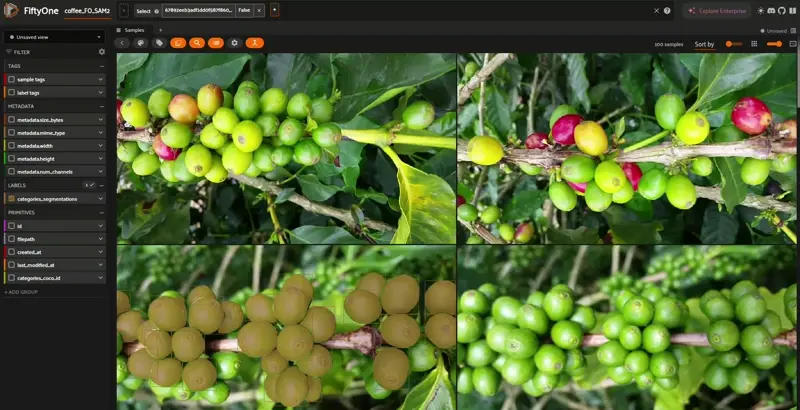
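FiftyOne stores each detection's bounding box as `[top-left-x, top-left-y, width, height]` in coordinates relative to the image size, while SAM-style predictors generally take boxes as pixel-space corners. FiftyOne's SAM 2 wrapper takes care of this when you pass `prompt_field`, so the following is only a minimal sketch of the conversion for readers who want to see what a box prompt looks like. The helper name `to_absolute_xyxy` is hypothetical and not part of FiftyOne.

```python
def to_absolute_xyxy(rel_box, img_width, img_height):
    """Convert a relative [x, y, w, h] box to absolute [x1, y1, x2, y2] pixels.

    Hypothetical helper for illustration; not a FiftyOne API.
    """
    x, y, w, h = rel_box
    return [
        x * img_width,          # left edge
        y * img_height,         # top edge
        (x + w) * img_width,    # right edge
        (y + h) * img_height,   # bottom edge
    ]


box = to_absolute_xyxy([0.25, 0.5, 0.5, 0.25], img_width=640, img_height=480)
print(box)  # [160.0, 240.0, 480.0, 360.0]
```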

Using a custom segmentation dataset#

We will use a segmentation dataset of coffee beans. It is published on the Hugging Face Hub as a FiftyOne dataset: pjramg/my_colombian_coffe_FO

[ ]:

import fiftyone as fo # base library and app

import fiftyone.utils.huggingface as fouh # Hugging Face integration

dataset_ = fouh.load_from_hub("pjramg/my_colombian_coffe_FO", persistent=True, overwrite=True)

# Define the new dataset name

dataset_name = "coffee_FO_SAM2"

# Check if the dataset exists

if dataset_name in fo.list_datasets():

    print(f"Dataset '{dataset_name}' exists. Loading...")

    dataset = fo.load_dataset(dataset_name)

else:

    print(f"Dataset '{dataset_name}' does not exist. Creating a new one...")

    # Clone the dataset with a new name and make it persistent

    dataset = dataset_.clone(dataset_name, persistent=True)

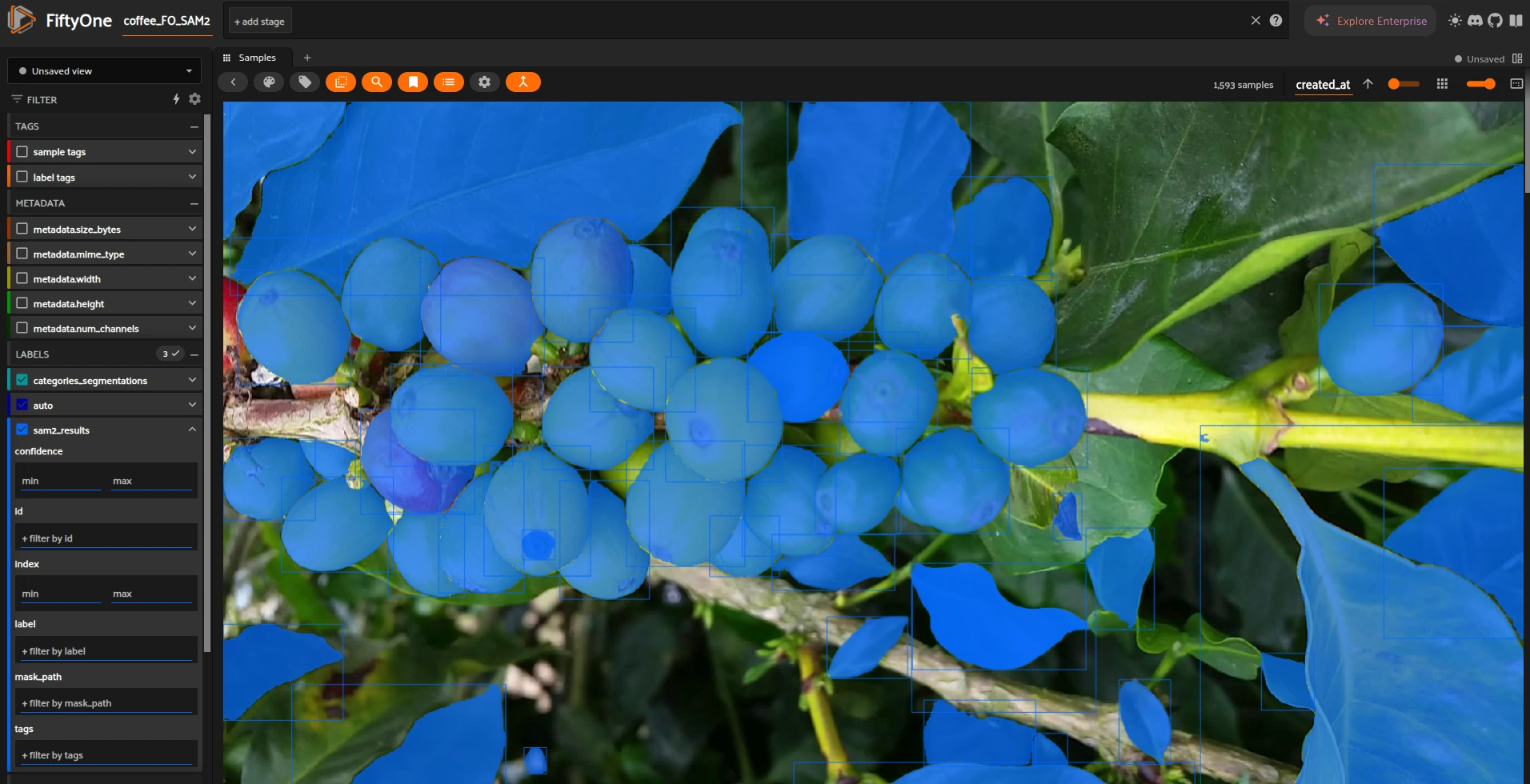

Selecting the 100 most unique samples in the dataset#

[ ]:

import fiftyone.brain as fob

results = fob.compute_similarity(dataset, brain_key="img_sim2")

results.find_unique(100)

[ ]:

unique_view = dataset.select(results.unique_ids)

session.view = unique_view
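To build intuition for what "unique" means here, the idea of picking a maximally diverse subset can be pictured as farthest-point sampling over embedding vectors. This is an illustrative sketch only: the function `greedy_unique` and the toy 2D points are assumptions, and this is not presented as the algorithm FiftyOne Brain uses internally.

```python
import math


def greedy_unique(embeddings, k):
    """Pick up to k indices by farthest-point sampling (illustrative only)."""
    n = len(embeddings)
    if k <= 0 or n == 0:
        return []
    chosen = [0]  # start from the first point; an arbitrary choice
    # Distance from each point to its nearest already-chosen point
    min_dist = [math.dist(e, embeddings[0]) for e in embeddings]
    while len(chosen) < min(k, n):
        candidates = [i for i in range(n) if i not in chosen]
        # Take the point that is farthest from everything chosen so far
        nxt = max(candidates, key=lambda i: min_dist[i])
        chosen.append(nxt)
        for i, e in enumerate(embeddings):
            min_dist[i] = min(min_dist[i], math.dist(e, embeddings[nxt]))
    return chosen


points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (0.0, 9.0)]
print(greedy_unique(points, 3))  # [0, 4, 3]
```

Near-duplicate points such as index 1 are skipped because they sit close to a point that was already selected.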

Apply SAM 2 to just the 100 unique samples#

SAM 2 can also segment entire images without needing any bounding boxes or keypoints. This zero-input mode is useful for generating segmentation masks for general visual analysis or bootstrapping annotation workflows.

[ ]:

import fiftyone.zoo as foz

model = foz.load_zoo_model("segment-anything-2-hiera-tiny-image-torch")

# Full automatic segmentations

unique_view.apply_model(model, label_field="sam2_results")
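Prompt-free segmentation in the Segment Anything family is typically done by prompting the model with a regular grid of points and then filtering and de-duplicating the masks that come back. Assuming the model used here follows that approach (this notebook does not confirm it), the grid itself can be sketched as follows. The function name and the choice of 4 points per side are illustrative assumptions.

```python
def build_point_grid(points_per_side):
    """Return evenly spaced (x, y) points in [0, 1] x [0, 1], row by row."""
    offset = 1.0 / (2 * points_per_side)  # keep points away from the border
    step = 1.0 / points_per_side
    coords = [offset + i * step for i in range(points_per_side)]
    return [(x, y) for y in coords for x in coords]


grid = build_point_grid(4)
print(len(grid))  # 16
print(grid[0])    # (0.125, 0.125)
```

A denser grid finds more small objects but costs more compute, since each point is a separate prompt.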

In case you run out of memory, you can free up GPU space by clearing the cache with:

[ ]:

import torch

torch.cuda.empty_cache()
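Note that `torch.cuda.empty_cache()` only releases cached memory that no live tensor is using, so it helps most after you drop references to models or results you no longer need (for example with `del model`). A slightly more defensive version, which also works on machines without a GPU, might look like the sketch below. The helper name `free_gpu_memory` is hypothetical.

```python
import gc


def free_gpu_memory():
    """Run garbage collection, then release PyTorch's cached CUDA memory.

    Returns True if a CUDA cache was cleared, False otherwise.
    """
    gc.collect()  # drop unreachable Python objects that may hold tensors
    try:
        import torch
    except ImportError:
        return False  # PyTorch is not installed; nothing to clear
    if not torch.cuda.is_available():
        return False  # CPU-only environment
    torch.cuda.empty_cache()
    return True


cleared = free_gpu_memory()
print("CUDA cache cleared:", cleared)
```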

Bonus with SAM 2#

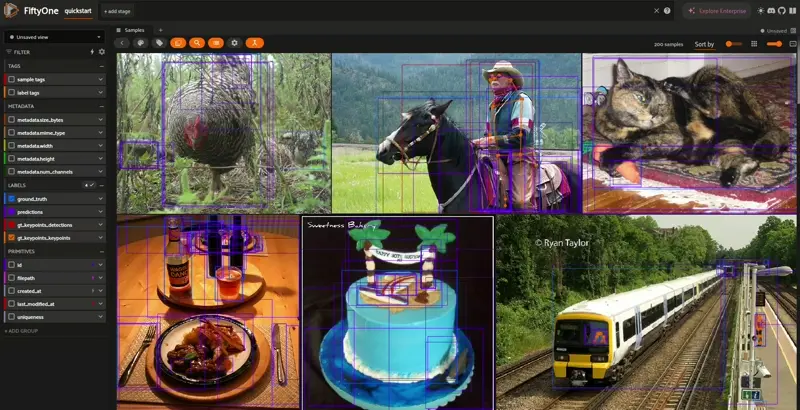

Prompting with Keypoints#

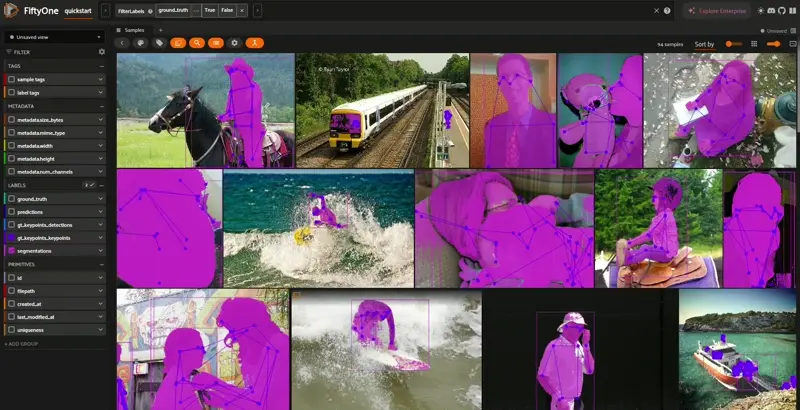

Keypoint prompts are a great alternative to bounding boxes when working with articulated objects like people. Here, we filter images to include only people, generate keypoints using a keypoint model, and then use those keypoints to prompt SAM 2 for segmentation.

[ ]:

from fiftyone import ViewField as F

# Filter persons only

dataset = foz.load_zoo_dataset("quickstart")

dataset = dataset.filter_labels("ground_truth", F("label") == "person")

# Apply keypoint detection

kp_model = foz.load_zoo_model("keypoint-rcnn-resnet50-fpn-coco-torch")

dataset.default_skeleton = kp_model.skeleton

dataset.apply_model(kp_model, label_field="gt_keypoints")

session = fo.launch_app(dataset)

[ ]:

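# Apply SAM 2 with keypoints. The keypoint model returns both detections and

# keypoints, so its keypoints are stored in "gt_keypoints_keypoints"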

model = foz.load_zoo_model("segment-anything-2-hiera-tiny-image-torch")

dataset.apply_model(model, label_field="segmentations", prompt_field="gt_keypoints_keypoints")

session = fo.launch_app(dataset)

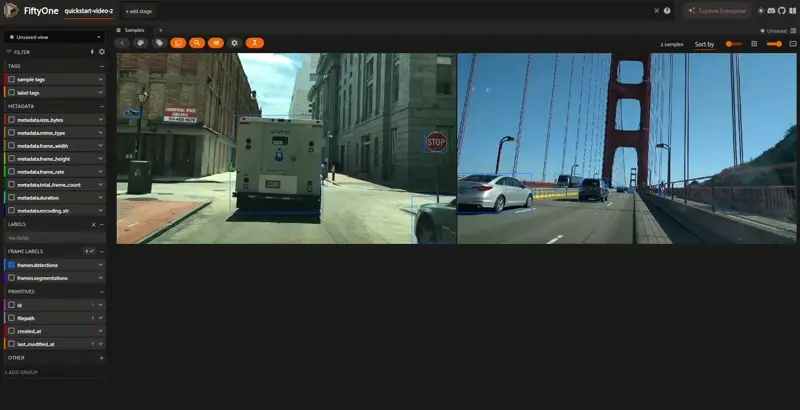
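FiftyOne keypoints are stored as `(x, y)` pairs in relative `[0, 1]` coordinates, and points that a keypoint model could not locate may be NaN. Point prompts for SAM-style models are usually pixel coordinates paired with a label, where 1 marks a foreground click. FiftyOne does this conversion for you; the sketch below only illustrates the idea, and the helper name is hypothetical.

```python
import math


def keypoints_to_point_prompts(rel_points, img_width, img_height):
    """Convert relative (x, y) keypoints to pixel coordinates plus labels.

    Points with NaN coordinates (not detected) are skipped. Every kept
    point gets label 1, the usual convention for a foreground click.
    """
    coords, labels = [], []
    for x, y in rel_points:
        if math.isnan(x) or math.isnan(y):
            continue
        coords.append((x * img_width, y * img_height))
        labels.append(1)
    return coords, labels


coords, labels = keypoints_to_point_prompts(
    [(0.5, 0.25), (float("nan"), float("nan")), (0.75, 0.5)],
    img_width=640,
    img_height=480,
)
print(coords)  # [(320.0, 120.0), (480.0, 240.0)]
print(labels)  # [1, 1]
```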

Using SAM 2 for Video#

SAM 2 extends segmentation from still images to video. Given a single bounding box or keypoint prompt on the first frame, it tracks the object and propagates segmentation masks through the rest of the sequence automatically.

[ ]:

from fiftyone import ViewField as F

dataset = foz.load_zoo_dataset("quickstart-video", max_samples=2)

# Remove boxes after first frame

(

    dataset

    .match_frames(F("frame_number") > 1)

    .set_field("frames.detections", None)

    .save()

)

session = fo.launch_app(dataset)
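The cell above leaves detections only on the first frame of each video, so that SAM 2 has a single-frame prompt to propagate. The effect can be pictured with plain dictionaries standing in for FiftyOne frames (frame numbers are 1-based). The function and the `box_a`/`box_b` placeholders are illustrative assumptions, not FiftyOne objects.

```python
def keep_first_frame_prompts(frames):
    """Return a copy of `frames` with detections cleared after frame 1.

    `frames` maps 1-based frame numbers to lists of detections.
    """
    return {
        number: (dets if number == 1 else None)
        for number, dets in frames.items()
    }


frames = {1: ["box_a", "box_b"], 2: ["box_a"], 3: ["box_b"]}
print(keep_first_frame_prompts(frames))
# {1: ['box_a', 'box_b'], 2: None, 3: None}
```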

[ ]:

# Apply video model with first-frame prompt

model = foz.load_zoo_model("segment-anything-2-hiera-tiny-video-torch")

dataset.apply_model(model, label_field="segmentations", prompt_field="frames.detections")

session = fo.launch_app(dataset)

Available SAM 2 Models in FiftyOne#

Image Models:

segment-anything-2-hiera-tiny-image-torch

segment-anything-2-hiera-small-image-torch

segment-anything-2-hiera-base-plus-image-torch

segment-anything-2-hiera-large-image-torch

Video Models:

segment-anything-2-hiera-tiny-video-torch

segment-anything-2-hiera-small-video-torch

segment-anything-2-hiera-base-plus-video-torch

segment-anything-2-hiera-large-video-torch
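The names above follow a regular pattern of backbone size and modality, so a small helper can build them and catch typos before a model download starts. This is a convenience sketch based only on the eight names listed here; `sam2_model_name` is a hypothetical helper, not a FiftyOne function.

```python
SIZES = ("tiny", "small", "base-plus", "large")
KINDS = ("image", "video")


def sam2_model_name(size, kind):
    """Build a SAM 2 zoo model name from a backbone size and a modality."""
    if size not in SIZES:
        raise ValueError(f"size must be one of {SIZES}, got {size!r}")
    if kind not in KINDS:
        raise ValueError(f"kind must be one of {KINDS}, got {kind!r}")
    return f"segment-anything-2-hiera-{size}-{kind}-torch"


print(sam2_model_name("tiny", "image"))
# segment-anything-2-hiera-tiny-image-torch
```

The resulting string can be passed to `foz.load_zoo_model(...)` in the same way as the literal names used throughout this notebook.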